Have you ever felt that a large language model (LLM) gave you a response that was a bit off the mark? Maybe it lacked specific details or missed the nuance of your query. Well, fret no more! The future of search is here, and it involves a powerful duo: vector search and Retrieval-Augmented Generation (RAG).

What's the Deal with Vector Search?

Imagine a vast library where every document carries a numeric fingerprint describing what it is about. That's essentially what vector search offers. An embedding model converts each piece of text into a vector embedding, a list of numbers arranged so that texts with similar meanings end up close together. When you ask a question, your query is converted into a vector the same way, and vector search returns the documents whose vectors are closest to it, typically measured with cosine similarity.
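To make the "closest fingerprint" idea concrete, here is a minimal brute-force sketch in Python. The three-dimensional vectors and document names are made up for illustration; a real system would get its vectors from an embedding model and, at scale, would typically use an approximate nearest-neighbor index instead of scoring every document.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search(query_vec: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Brute-force nearest-neighbor search: score every document, keep the top k."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical 3-dimensional "embeddings"; real embedding models
# produce hundreds or thousands of dimensions.
index = {
    "reset-password": [0.9, 0.1, 0.0],
    "billing-faq":    [0.1, 0.9, 0.1],
    "login-issues":   [0.8, 0.2, 0.1],
}
query = [0.85, 0.15, 0.05]  # pretend embedding of "I can't sign in"
print(search(query, index))  # → ['reset-password', 'login-issues']
```

Cosine similarity is one common choice; dot product and Euclidean distance are also used, depending on how the embedding model was trained.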

How Does RAG Elevate the Game?

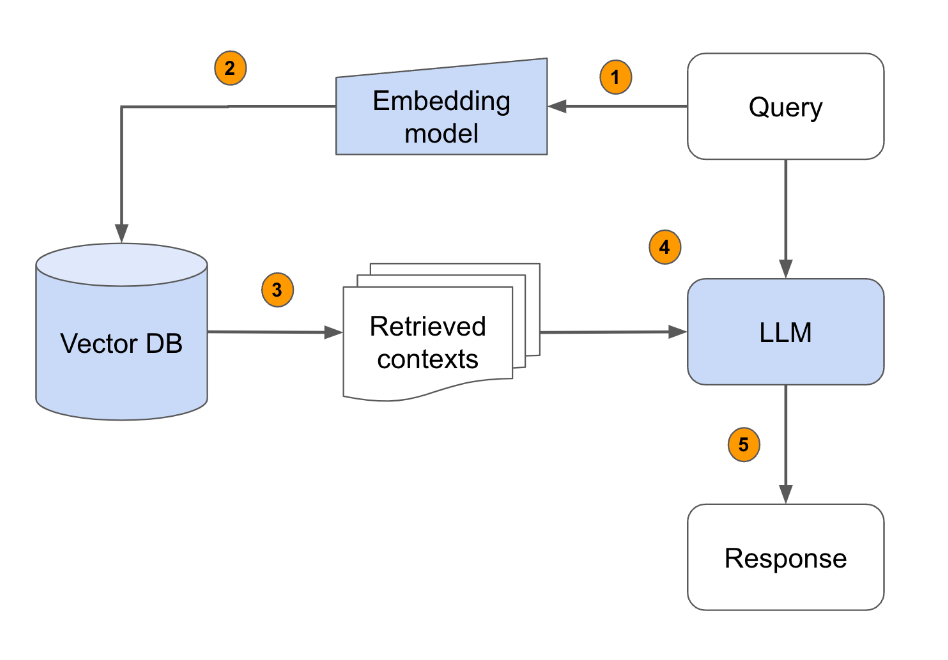

RAG takes search to a whole new level by combining vector search with the power of LLMs. Here's how it works:

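Understanding Your Needs: RAG starts by converting your question into a vector embedding, usually with a dedicated embedding model rather than the LLM itself (some systems also ask the LLM to rephrase the query first).

Diving Deep with Vector Search: Vector search then compares that query embedding with the embeddings of the documents in the knowledge base and returns the closest matches, so relevance is judged by meaning rather than by shared keywords.

Crafting the Perfect Response: Finally, the retrieved passages are added to the LLM's prompt as context, and the LLM generates an answer grounded in them instead of relying only on what it learned during training.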
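The three stages above can be sketched end to end in a few dozen lines of Python. This is an illustrative sketch, not a production design: `toy_embed` is a hypothetical stand-in that counts words instead of calling a real embedding model, the documents are invented, and the LLM is passed in as a plain function (`llm`) so you can plug in whichever model client you use.

```python
import math
import re
from collections import Counter
from typing import Callable

# Toy knowledge base (hypothetical company documentation).
DOCS = [
    "To reset your password, open Settings and choose Reset Password.",
    "Invoices are emailed on the first day of each month.",
    "If login fails three times, your account is locked for 15 minutes.",
]

def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector.
    A real neural embedding model would also place paraphrases close together."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Offline: embed every document once and keep the vectors alongside the text.
INDEX = [(doc, toy_embed(doc)) for doc in DOCS]

def retrieve(question: str, k: int = 2) -> list[str]:
    """Embed the query, then return the k most similar documents."""
    q = toy_embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question: str, passages: list[str]) -> str:
    """Insert the retrieved passages into the prompt as grounding context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

def answer(question: str, llm: Callable[[str], str], k: int = 2) -> str:
    """Full RAG loop: retrieve, augment the prompt, then let the LLM generate."""
    return llm(build_prompt(question, retrieve(question, k)))
```

For example, `retrieve("How do I reset my password?", k=1)` returns the password-reset document, and `answer(question, llm=my_client)` sends the augmented prompt to your model, where `my_client` is a hypothetical function wrapping whatever model API you use. Swapping `toy_embed` for a real embedding model is what lets retrieval match paraphrases that share no words with the query.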

The Benefits of this Dynamic Duo

By grounding an LLM in documents found with vector search, you get:

More Relevant Answers: Vector search supplies the LLM with the passages most similar to your query, which tends to make responses more accurate and specific than answers drawn from the model's training data alone.

Contextual Understanding: Because embeddings capture meaning rather than exact wording, retrieval can surface relevant passages even when they share no keywords with your question, leading to more nuanced answers.

Knowledge Base Power: You can plug in an existing knowledge base, such as a company's documentation or a collection of scientific research papers, to enrich LLM responses without retraining the model.

Real-World Applications of RAG and Vector Search

This powerful combination can be used in various applications:

Customer Service Chatbots: Imagine chatbots that can not only answer basic questions but also delve deeper into knowledge bases to provide specific solutions.

Intelligent Search Engines: Search engines that understand the intent behind your search and deliver results that truly address your needs.

Content Creation Tools: AI assistants that can help writers and researchers find relevant information and generate high-quality content.

The Future of Search is Bright

Vector search and RAG are changing the way we interact with information. By pairing the language abilities of LLMs with the efficiency of vector search, we can unlock search experiences that are more relevant, better grounded, and tailored to the knowledge we care about. So, buckle up and get ready for a search revolution!


Thank you for reading this far. If you want to learn more, ping me personally, and make sure you're following me everywhere for the latest updates.

Yours sincerely,

Sai Aneesh